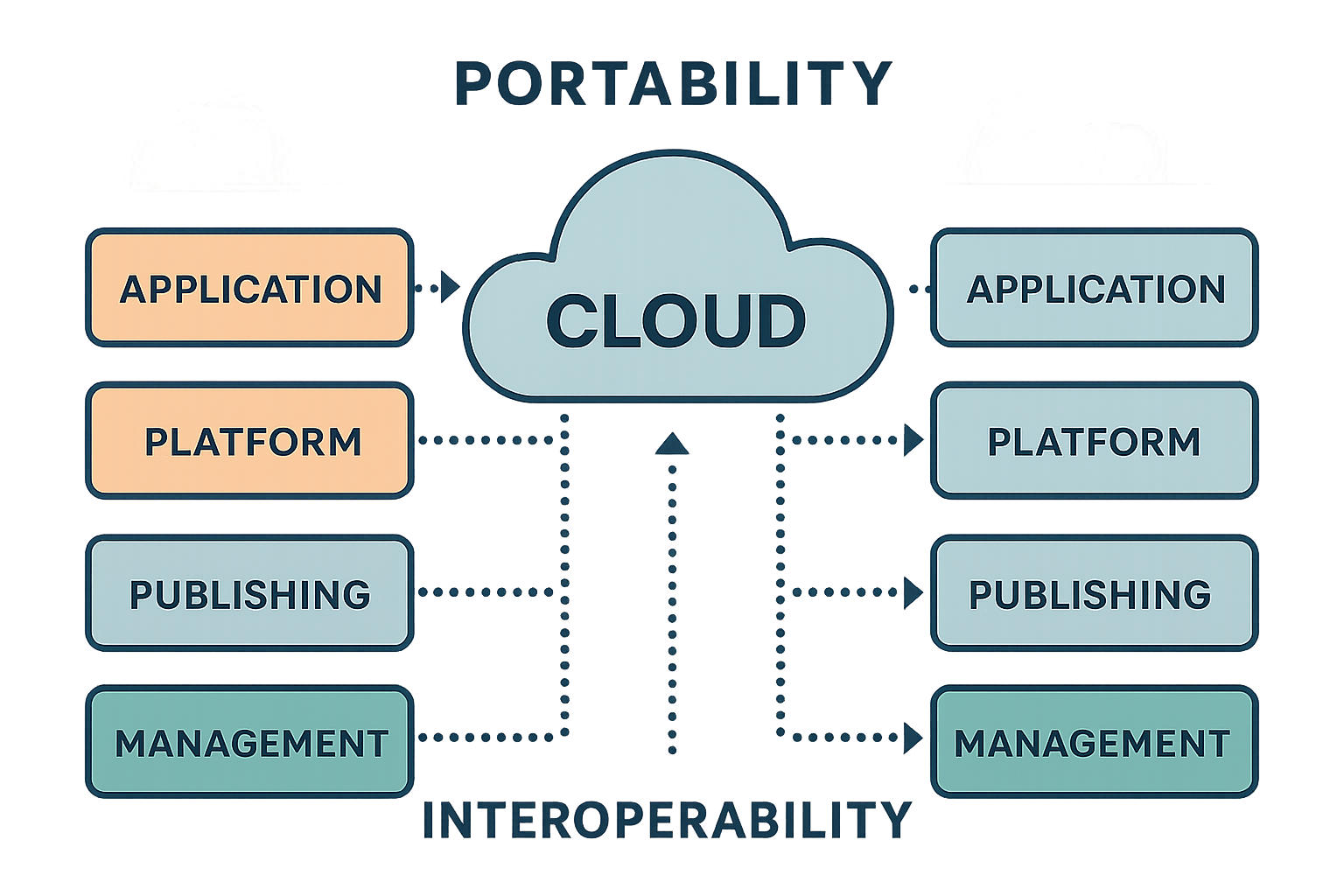

“Cloud portability is a key factor in reducing vendor lock-in and enabling freedom of choice across cloud providers. From containerized applications to storage virtualization and data abstraction, organizations can design architectures that work across AWS, Azure, and Google Cloud. This post explores the challenges of specialized resources and the strategies that make true interoperability possible.”

Table of Contents

Cloud Portability and Specialized Resources (Lock-In)

Many public cloud providers have started introducing specialized cloud resources that are native to a specific cloud platform and not portable to other cloud environments.

This chapter focuses specifically on public cloud because, in the author’s view, the introduction of proprietary cloud-native specialized resources undermines one of the fundamental potential benefits of cloud computing: portability.

In a private cloud or traditional data center, the model typically involves deploying applications on virtualized host systems based on well-known operating systems, using service models comparable to IaaS or PaaS.

With the widespread adoption of containerization, which is dominant in cloud-native ecosystems, portability—the ability to run the same container across different cloud environments—has become relatively feasible, especially when Platform Engineering, Infrastructure as Code (IaC), and DevOps practices are applied.

In this model, the source code inside the container remains agnostic of the lower ISO/OSI layers through which it executes. Ideally, developers writing the source code should also be unaware of these underlying layers.

This approach enables portability via automated DevOps deployment pipelines, which are discussed in detail later in this book.

However, SaaS solutions have always been cloud-native and inherently lack portability. Examples include Microsoft 365 (formerly Office 365) and Google Workspace, which enable integration and interoperability but do not facilitate migration between cloud providers.

For example, if an enterprise builds micro-automation and computational processes around Microsoft 365 or Google Workspace, data migration complexity increases significantly, leading to potential loss of information.

AWS S3 as a Notable Exception

AWS S3, which originated as a native storage service within the Amazon cloud ecosystem, has evolved into a widely adopted storage standard.

Thanks to third-party virtualization software libraries, AWS S3 storage mechanisms can now be used outside of Amazon’s cloud environment, making it one of the rare cloud resources that can transcend provider boundaries.

Cloud Providers and Data Lock-In

Today, the primary focus in cloud computing revolves around data management, and major cloud providers actively work to keep data within their own ecosystems.

The reason is simple: data lifecycle management drives the highest consumption of fundamental cloud resources such as compute, storage, and data transfer.

With the rise of Generative AI (Gen AI) services, both data consumption and cloud dependency have increased. As a result, cloud providers now offer highly specialized SaaS/PaaS cloud-native resources.

Three notable examples of cloud-specific services include:

- Google BigQuery (Google Cloud Platform – GCP)

- Microsoft Fabric(Azure)

- AWS Glue (Amazon Web Services – AWS)

Currently, there is no portability between solutions such as BigQuery on GCP and Fabric on Azure, or vice versa. This lack of interoperability results in vendor lock-in, forcing businesses to commit to a specific cloud ecosystem.

Cloud Resource Classification: Beyond Service and Distribution Models

To accurately classify cloud resources, portability must be considered in addition to service model and distribution model.

Can Modern Architectures Reduce Lock-In?

The answer is yes, but not for all scenarios.

Later in a next post, we will explore architectural strategies that can minimize dependence on a single cloud provider.

But we can anticipate a core-based strategy to enable the cloud portability: use a Virtualizing ISO/OSI Storage Layers strategy.

While containers enable software portability, ensuring data portability requires a similar approach.

The key is to virtualize the ISO/OSI layers responsible for data storage and adopt an abstraction model that decouples data storage from data lifecycle management.

By implementing layered abstractions, organizations can design virtualized ecosystems where both software and data operate independently from the underlying cloud infrastructure.

Holistic Vision

Cloud portability is more than a technical choice—it is a strategic foundation for building resilient and future-proof ecosystems. Specialized resources may offer innovation, but they also increase dependency and risk. By combining containerization, storage abstraction, and modern DevOps practices, organizations can strike a balance between leveraging advanced cloud-native services and preserving the freedom to evolve across providers. In this perspective, portability is not only about moving workloads—it is about safeguarding autonomy, enabling innovation, and ensuring that both human and AI-driven systems can thrive in a truly interoperable digital ecosystem.

References

This article is an excerpt from the book

Cloud-Native Ecosystems

A Living Link — Technology, Organization, and Innovation

Leave a Reply