The evolution of computing is marked by continuous miniaturization, coupled with exponential increases in computational capacity and energy efficiency. This journey has taken us from room-sized mainframes to modern smartwatches, passing through electronic calculators, portable personal computers, and mobile phones.


Computational Capacity Evolution

At the dawn of the computing era, around the 1940s, computational machines required significant physical space.

Computation was not shared but confined to the physical location where the calculations were performed.

Data storage was an arduous task.

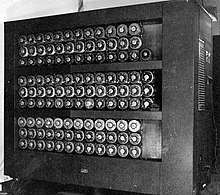

A good example comes from the 1940s setting of the 2014 film The Imitation Game, which dramatizes Alan Turing’s codebreaking work at Bletchley Park. Two machines from that era stand out: the Bombe, an electromechanical device designed by Turing that emulated the rotors of several Enigma machines at once to find the settings of the German Enigma cipher (effectively acting as a test environment for Enigma!), and Colossus, the electronic computer built by Tommy Flowers to break the German Lorenz cipher.

Fast-forwarding about 10 years (keeping Moore’s Law in mind), we arrive at 1951, when UNIVAC I was introduced. UNIVAC I was the first American computer designed from the outset for business and administrative use: it was built for the rapid execution of relatively simple arithmetic and data transfer operations, rather than the complex numerical calculations required by scientific computers.

UNIVAC I consumed approximately 125,000 watts (125 kW) of power. It used 6,103 vacuum tubes, weighed 7.6 tons, and could execute about 1,905 operations per second with a clock speed of 2.25 MHz.

It required approximately 35.5 square meters (382 square feet) of space.

It could read 7,200 decimal digits per second (as it did not use binary numbers), making it by far the fastest business machine ever built at the time.

UNIVAC I featured a central processing unit (CPU), memory, input/output devices, and a separate console for operators (von Neumann approves!).

Let’s now take a brief journey through the years to explore the evolution of computing devices.

Mainframes (1960s–1970s):

- Weight and Dimensions: Mainframes occupied entire rooms and weighed several tons.

- Energy Consumption: They could consume up to 1 megawatt (1,000,000 watts) of power.

- Computational Capacity: Measured in millions of instructions per second (MIPS) or millions of floating-point operations per second (MFLOPS); for example, the Cray-1 supercomputer (1976) peaked at about 160 MFLOPS.

Electronic Calculators (1970s):

- Weight and Dimensions: Early electronic calculators weighed around 1–2 kg and were the size of a book.

- Energy Consumption: Powered by batteries or mains electricity, consuming only a few watts.

- Computational Capacity: Limited to basic arithmetic operations, with speeds in the range of a few operations per second.

Portable Personal Computers (1980s–1990s):

- Weight and Dimensions: Early laptops weighed between 4 and 7 kg, with significant thickness.

- Energy Consumption: Battery-powered with limited autonomy, consuming tens of watts.

- Computational Capacity: CPUs with clock speeds between 4.77 MHz (e.g., IBM 5155, 1984) and 100 MHz, capable of executing from hundreds of thousands to tens of millions of instructions per second.

Mobile Phones (2000s):

- Weight and Dimensions: Devices weighed between 100 and 200 grams, easily portable.

- Energy Consumption: Rechargeable batteries with capacities between 800 and 1500 mAh, consuming only a few watts.

- Computational Capacity: Processors with clock speeds between 100 MHz and 1 GHz, capable of millions of instructions per second, supporting applications beyond simple voice communication.

Smartwatches (2010–Present):

- Weight and Dimensions: Devices weigh between 30 and 50 grams, with screens just a few square centimeters.

- Energy Consumption: Batteries with capacities between 200 and 400 mAh, optimized for minimal energy consumption.

- Computational Capacity: Processors with clock speeds between 1 and 2 GHz, capable of running complex applications, health monitoring, and advanced connectivity.

Computational Capacity Today

Before diving into today’s computational capacity, we need a standard scale for measuring computational performance: FLOPS (Floating Point Operations Per Second), the number of floating-point operations a system performs in one second.

- Kiloflops (KFLOPS):
  - 1 KFLOPS = 10³ FLOPS
  - Computational capacity of computers from the 1960s.

- Megaflops (MFLOPS):
  - 1 MFLOPS = 10⁶ FLOPS
  - Computational capacity of computers from the 1980s.

- Gigaflops (GFLOPS):
  - 1 GFLOPS = 10⁹ FLOPS
  - Computational capacity of mid-range CPUs and GPUs in the early 2000s.

- Teraflops (TFLOPS):
  - 1 TFLOPS = 10¹² FLOPS
  - Computational capacity of modern GPUs and advanced supercomputers.

- Petaflops (PFLOPS):
  - 1 PFLOPS = 10¹⁵ FLOPS
  - First achieved in 2008 by the Roadrunner supercomputer.

- Exaflops (EFLOPS):
  - 1 EFLOPS = 10¹⁸ FLOPS
  - Computational capacity reached by the most advanced supercomputers, such as Frontier (2022).

- Zettaflops (ZFLOPS) (currently theoretical):
  - 1 ZFLOPS = 10²¹ FLOPS
  - Considered the future of computing; often cited as the scale needed to fully simulate complex systems like the human brain.

- Yottaflops (YFLOPS) (currently theoretical):
  - 1 YFLOPS = 10²⁴ FLOPS
  - A hypothetical level of computation for technologies yet to be realized.
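To make the scale above concrete, here is a minimal Python sketch that expresses a raw FLOPS value with the largest prefix from the list that keeps the number at or above 1. The helper name `format_flops` is our own, hypothetical choice, not part of any library:

```python
# Prefixes from the scale above, as (symbol, power of ten), largest first.
PREFIXES = [
    ("Y", 24),
    ("Z", 21),
    ("E", 18),
    ("P", 15),
    ("T", 12),
    ("G", 9),
    ("M", 6),
    ("K", 3),
]


def format_flops(flops: float) -> str:
    """Express a raw FLOPS value using the largest prefix that keeps it >= 1."""
    for symbol, exponent in PREFIXES:
        scale = 10 ** exponent
        if flops >= scale:
            return f"{flops / scale:g} {symbol}FLOPS"
    # Values below one kiloflop are printed without a prefix.
    return f"{flops:g} FLOPS"


if __name__ == "__main__":
    print(format_flops(20e15))   # 20 PFLOPS
    print(format_flops(1.1e18))  # 1.1 EFLOPS
    print(format_flops(500))     # 500 FLOPS
```

For example, the 20 PFLOPS quoted for the NVIDIA B200 is 2 × 10¹⁶ FLOPS, which the sketch renders as `20 PFLOPS`.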

The Apple A15 Bionic chip weighs just a few milligrams, has a clock speed of 3.1 GHz, and consumes only 6 watts (TDP), while its Neural Engine can execute 15.8 trillion operations per second (TOPS).

UNIVAC I, by comparison, used 6,103 vacuum tubes, weighed 7.6 tons, and could execute approximately 1,905 operations per second with a clock speed of 2.25 MHz.
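A rough back-of-the-envelope comparison of the two throughput figures can be sketched in Python. It assumes the A15’s 2021 launch year (not stated in the text) and treats both figures as comparable “operations”, which they are not: the A15’s 15.8 TOPS refers to AI workloads on its Neural Engine, while UNIVAC I performed decimal operations. Treat the result as an order-of-magnitude illustration only:

```python
import math

# Throughput figures quoted in the text (operations per second).
UNIVAC_OPS = 1_905        # UNIVAC I, introduced 1951
A15_OPS = 15.8e12         # Apple A15 Bionic Neural Engine (15.8 TOPS)

# Assumption: the A15 launched in 2021; the text does not give the year.
YEARS = 2021 - 1951

speedup = A15_OPS / UNIVAC_OPS      # how many times faster
doublings = math.log2(speedup)      # how many doublings that speedup represents
doubling_time = YEARS / doublings   # average years per doubling

print(f"speedup: {speedup:.2e}")
print(f"doublings: {doublings:.1f}")
print(f"implied doubling time: {doubling_time:.2f} years")
```

Under these assumptions the speedup is about 8 billion, or roughly 33 doublings in 70 years: one doubling about every 2.1 years, close to the two-year period commonly associated with Moore’s Law.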

The A15 Bionic is not the fastest chip in the world.

At the time of writing, the NVIDIA Blackwell B200 is among the most powerful chips in the world, with a peak speed of 20 Petaflops at low (FP4) precision, meaning 20,000 trillion operations per second.

It consumes 1,000 watts (TDP).
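Energy efficiency, measured as operations per second delivered per watt, can be compared with the same caveats. This Python sketch uses only the figures quoted in the text; note that UNIVAC I’s 125 kW is whole-system consumption, whereas the A15 and B200 values are chip TDPs, and the B200’s 20 PFLOPS is a low-precision peak:

```python
# (operations per second, watts) as quoted in the text.
systems = {
    "UNIVAC I": (1_905, 125_000),
    "Apple A15 Bionic": (15.8e12, 6),
    "NVIDIA B200": (20e15, 1_000),
}


def ops_per_watt(ops: float, watts: float) -> float:
    """Operations per second delivered for each watt consumed."""
    return ops / watts


for name, (ops, watts) in systems.items():
    print(f"{name:>18}: {ops_per_watt(ops, watts):.3g} ops/s per W")
```

With these inputs the B200 delivers about 2 × 10¹³ operations per second per watt, roughly 7 to 8 times the A15’s 2.6 × 10¹² and about fifteen orders of magnitude more than UNIVAC I’s 0.015.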

Modern systems combine multiple boards (or entire motherboards) that work simultaneously, linked by specially designed high-speed buses.

This extends the logic of Moore’s Law beyond the miniaturization of individual chips to their aggregation into systems of interconnected boards dedicated to computation.

However, certain physical factors limit scalability, with heat dissipation being the most critical constraint.

Over the years, the ability to aggregate computational power and store the resulting data has become a defining factor in the technological and economic strength of nations and entire continents. Its impact is especially strong in scientific research worldwide.

For example, consider the computational capacity required at CERN in Geneva to process the high-energy particle collisions conducted in its laboratories, or the processing power needed to create the first image of a black hole. On a more routine level, computational capacity is essential for weather forecasting, stock market predictions, and autonomous vehicle navigation.

Computational capacity was originally organized around a central computer that received instructions from terminals and distributed the results of its calculations to various devices (terminals, printers).

This model is far from obsolete. It remains highly relevant today, especially in the operation of the world’s few supercomputers, which have evolved into super data centers (vast server farms).

Owning one of these large data centers has also become a matter of geopolitical positioning for nations. The presence or absence of such infrastructure enables countries to lead in critical scientific and military fields.

Today, NVIDIA offers a data center solution with the DGX SuperPOD system: a setup composed of 127 DGX B200 systems, each hosting 36 NVIDIA Grace CPUs and 72 NVIDIA Blackwell GPUs.

Let’s estimate the energy consumption from the figures given earlier for a single Blackwell B200 chip!

This configuration reaches computational capacities measured in Exaflops.
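The estimate proposed above can be sketched in Python, taking the SuperPOD configuration exactly as stated (127 systems × 72 Blackwell GPUs) and the per-chip B200 figures quoted earlier (1,000 W, 20 PFLOPS at low precision). It counts GPUs only; CPUs, networking, storage, and cooling are ignored, so a real facility would draw noticeably more power:

```python
# Configuration figures taken from the text; treat them as assumptions.
SYSTEMS = 127            # DGX systems in the SuperPOD, as stated
GPUS_PER_SYSTEM = 72     # Blackwell GPUs per system, as stated
GPU_WATTS = 1_000        # per-GPU TDP quoted for the B200
GPU_FLOPS = 20e15        # per-GPU peak quoted for the B200 (low precision)

gpus = SYSTEMS * GPUS_PER_SYSTEM              # total GPU count
gpu_power_mw = gpus * GPU_WATTS / 1e6         # GPU power only, in megawatts
peak_eflops = gpus * GPU_FLOPS / 1e18         # theoretical peak, in EFLOPS

print(f"GPUs: {gpus}")                              # GPUs: 9144
print(f"GPU power alone: {gpu_power_mw:.1f} MW")    # 9.1 MW
print(f"theoretical peak: {peak_eflops:.0f} EFLOPS")  # 183 EFLOPS
```

Under these assumptions the GPUs alone draw about 9.1 MW and offer a theoretical peak of about 183 EFLOPS at low precision, consistent with the claim that such a configuration reaches the Exaflops range.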

Today, the world’s leading supercomputing data centers aim to provide computational capacities measured in hundreds of Exaflops (1 Exaflop = 1,000,000,000,000,000,000 FLOPS), the result of roughly seven decades of progress since the 1950s.

A similar trend can also be observed in data storage capacity.

High Cloud Capacity in Italy

In Italy, some significant advancements are underway. The “Leonardo” supercomputer, located at the Tecnopolo in Bologna and managed by the CINECA consortium, was inaugurated in November 2022. Leonardo is an Atos BullSequana XH2000 system, equipped with nearly 14,000 NVIDIA Ampere GPUs and a peak capacity of 250 Petaflops.

Additionally, the Italian startup iGenius has announced a collaboration with NVIDIA to build “Colosseum”, one of the world’s largest supercomputers based on the NVIDIA DGX SuperPOD. This data center, located in southern Italy, will house approximately 80 NVIDIA GB200 NVL72 servers, each equipped with 72 “Blackwell” chips.

The project, expected to be operational by mid-2025, aims to develop open-source artificial intelligence models for highly regulated sectors such as banking and healthcare (see “Italian startup iGenius and Nvidia to build major AI system”, Reuters).

A further example of innovation in sustainable high-performance computing comes from Intacture – Trentin Data Mine, an Italian project designed to combine massive computational power with radical energy efficiency. Unlike traditional supercomputing centers, Intacture is built inside a former mining facility, taking advantage of natural cooling conditions and renewable energy sources. The initiative aims to create one of the most energy-efficient computing farms in Europe, capable of supporting artificial intelligence workloads, scientific simulations, and financial modeling while minimizing its carbon footprint. This approach not only reduces operational costs but also demonstrates how the next generation of data centers can merge ecological responsibility with technological excellence. More details about the project can be found here: https://www.intacture.com.

Holistic Vision

The story of computational capacity is, at its core, the story of humanity’s relentless pursuit of efficiency, speed, and scale. From the massive machines that filled entire rooms in the 1940s to today’s chips weighing only a few grams yet performing trillions of operations per second, the trajectory has been nothing short of extraordinary. Each leap forward has redefined not only how we compute but also how we live, work, and organize society.

Yet this progress comes with new responsibilities. The growth of computational capacity now intersects with global challenges such as sustainability, energy consumption, and geopolitical competition. Supercomputers and AI data centers have become strategic assets, as vital as oil reserves or transportation networks once were.

Looking ahead, the race beyond exascale, toward zetta- and yotta-scale computing, will demand not only technical ingenuity but also bold choices in energy management and international collaboration. Projects like Italy’s Leonardo, the upcoming Colosseum, and sustainable initiatives such as Intacture – Trentin Data Mine highlight the dual imperative of power and responsibility: to build computing capacity that is both transformative and sustainable.

The journey from UNIVAC I to NVIDIA’s Blackwell and beyond is far from over. It is a reminder that the future of computing will not only be measured in FLOPS, but also in how wisely we harness that power for the benefit of humanity.

References

This article is an excerpt from the book

Cloud-Native Ecosystems

A Living Link — Technology, Organization, and Innovation