In today’s digital world, the cloud is often perceived as an abstract concept, hidden behind the simplicity of a web interface. Yet, behind every click, there is a vast and complex infrastructure made of data centers, high-speed connections, and advanced virtualization technologies. In this article, adapted from my book Exploring Cloud-Native Ecosystems, we’ll explore the physical and logical foundations of the cloud to understand how it is truly built and how it works.


How the Cloud is Built and How It Works | Essential Guide to Cloud Infrastructure & Digital Transformation

The widespread adoption of cloud computing, as detailed in my post Cloud Adoption, would not have been possible without several enabling industrial factors:

- The expansion of a stable, high-speed, and highly available global network infrastructure.

- The exponential growth of computational capacity per unit of physical space, along with a reduction in equivalent energy consumption.

- The evolution of computational models.

We have seen that the cloud can be described through its service models and distribution models, presenting itself as a ready-to-use service for consumers.

We have also seen how cloud resources, and therefore the entire cloud, can be summarized into a few key elements: computational power, data storage, and data transport.

Moreover, we have seen that these characteristics are enabled by specific electronic devices.

In reality, the cloud consists of all these components—just on a much larger scale.

Cloud services, whether public or private, are delivered from data centers; in the public cloud, these form a vast network of facilities distributed worldwide and managed directly by the cloud providers.

Each data center contains enormous stacks of computing units, such as NVIDIA's DGX SuperPOD (though not every data center hosts one 😊).

What is Inside a Cloud Data Center?

A cloud data center is a facility that can span vast physical dimensions, as shown in Figure.

Inside a cloud data center, we find rows of specialized servers neatly stored inside rack enclosures—tall, standardized metal cabinets designed to house multiple computing units in a compact and organized manner.

Unlike traditional office computers, which typically have keyboards, monitors, and user interfaces for direct interaction, cloud servers are headless, meaning they lack direct input/output devices. Instead, they are designed for remote management and automated operation, ensuring maximum efficiency and scalability.

Each rack-mounted server typically contains:

- Motherboards with powerful multi-core processors (CPUs & GPUs) optimized for parallel workloads.

- High-speed RAM (memory modules) to handle intensive data processing.

- Storage devices (HDDs for bulk capacity, SSDs and NVMe drives for fast access to data).

- Network interface cards (NICs) that allow high-speed communication with other servers.

- Redundant power supply units (PSUs) to ensure continuous operation.

To enable seamless operation across thousands of machines, these rack-mounted servers are interconnected through high-speed links, forming a massively parallel computing environment.

Key technologies enabling communication within a cloud data center include:

- Backplane Bus Systems – Each rack (or blade enclosure within it) has an integrated backplane, a high-speed communication backbone that interconnects the servers it houses.

- High-Speed Network Switching – Servers are connected through high-speed network switches, typically linked by fiber-optic cabling, enabling low-latency data exchange between different racks and clusters.

- Software-Defined Networking (SDN) – Instead of relying on traditional manual network configurations, cloud providers use software-defined networking, which allows dynamic traffic routing and load balancing across the entire data center.

- Inter-Rack Optical Links – Since cloud computing requires extreme bandwidth, data is transmitted over fiber-optic cables inside the data center, connecting racks at speeds of 100 Gbps or higher.

- Distributed Storage Systems – Cloud servers generally do not keep persistent data on their local disks the way personal computers do. Instead, they access a distributed storage layer that spans multiple racks and even multiple data centers, ensuring redundancy and fault tolerance.
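To make the redundancy idea of a distributed storage layer more concrete, here is a minimal sketch of rack-aware replica placement: each copy of a data block goes to a server in a different rack, so the loss of a whole rack never destroys every copy. The server and rack names, the three-copy default, and the `place_replicas` and `stable_hash` helpers are hypothetical and purely illustrative; real storage systems use far more elaborate placement policies.

```python
from collections import defaultdict

# Hypothetical inventory for illustration: server name -> rack it sits in.
SERVERS = {
    "srv-a1": "rack-A", "srv-a2": "rack-A",
    "srv-b1": "rack-B", "srv-b2": "rack-B",
    "srv-c1": "rack-C",
}


def stable_hash(text: str) -> int:
    # Deterministic stand-in for a real hash (Python's hash() is randomized per run).
    return sum(text.encode("utf-8"))


def place_replicas(block_id: str, servers: dict[str, str], copies: int = 3) -> list[str]:
    """Choose `copies` servers for one data block, never two in the same rack,
    so that losing an entire rack still leaves at least one copy intact."""
    by_rack: dict[str, list[str]] = defaultdict(list)
    for server, rack in sorted(servers.items()):
        by_rack[rack].append(server)
    racks = sorted(by_rack)
    if copies > len(racks):
        raise ValueError("not enough racks to keep every copy in a different rack")
    h = stable_hash(block_id)
    chosen = []
    for i in range(copies):
        # Rotate the starting rack by the block's hash to spread blocks across racks.
        rack = racks[(h + i) % len(racks)]
        candidates = by_rack[rack]
        chosen.append(candidates[h % len(candidates)])
    return chosen


print(place_replicas("block-42", SERVERS))
```

Spreading copies by rack rather than by server is the design choice that matters here: a single power or switch failure usually takes down a whole rack at once.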

How These Servers Work Together

Each server in a rack is not an isolated unit but part of a cluster, working together to handle massive computational workloads. Cloud data centers are architected using the concept of hyperscale computing, meaning:

- Workloads are dynamically distributed across multiple physical machines.

- A single task (e.g., processing an AI model or serving a website) may run across dozens or even hundreds of servers simultaneously.

- If one server fails, its workload is automatically shifted to another available machine, ensuring continuous service availability.
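The failover behavior described in the last point can be sketched as a tiny scheduler: workloads on a failed server are moved, one at a time, to the least-loaded healthy server. This is a simplified illustration under assumed names (`reschedule`, the server and workload identifiers are hypothetical); production orchestrators also weigh CPU, memory, affinity rules, and more.

```python
def reschedule(workloads: dict[str, str], healthy: set[str]) -> dict[str, str]:
    """Return a new workload -> server map in which every workload placed on an
    unhealthy server is moved to the currently least-loaded healthy server."""
    if not healthy:
        raise RuntimeError("no healthy servers available")
    load = {server: 0 for server in healthy}
    placement: dict[str, str] = {}
    # Keep workloads that already run on healthy servers where they are.
    for workload, server in workloads.items():
        if server in healthy:
            placement[workload] = server
            load[server] += 1
    # Move orphaned workloads one by one, always to the least-loaded server.
    for workload, server in sorted(workloads.items()):
        if server not in healthy:
            target = min(sorted(healthy), key=lambda s: load[s])
            placement[workload] = target
            load[target] += 1
    return placement


# Hypothetical cluster state: srv-2 has just failed.
current = {"web-1": "srv-1", "web-2": "srv-2", "ai-job": "srv-2", "db": "srv-3"}
print(reschedule(current, healthy={"srv-1", "srv-3"}))
```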

The Role of Virtualization and Containers


The Importance of Rack Density & Cooling

Because cloud data centers must pack thousands of high-performance servers into a limited space, rack density is a critical factor. Modern high-density deployments can reach:

- 40 to 60 blade servers per rack

- Up to 10,000 CPU cores per data hall

This extreme density generates massive amounts of heat, requiring advanced cooling technologies, including:

- Liquid cooling solutions that circulate coolant to dissipate heat.

- Hot aisle / cold aisle configurations to optimize airflow and prevent overheating.

- AI-powered energy management to dynamically adjust cooling based on real-time workloads.
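Why density and cooling go hand in hand is easy to see with some back-of-the-envelope arithmetic: practically all the electricity a rack draws is turned into heat that the cooling system must remove. The sketch below assumes a hypothetical rack of 48 blades with 64 cores and 500 W each; these figures are illustrative, not measurements. It uses the standard conversion of 1 W ≈ 3.412 BTU/h.

```python
from dataclasses import dataclass

# 1 watt of continuous electrical load is dissipated as roughly 3.412 BTU/h of heat.
WATTS_TO_BTU_PER_HOUR = 3.412


@dataclass
class RackProfile:
    blades: int              # blade servers installed in the rack
    cores_per_blade: int     # CPU cores on each blade
    watts_per_blade: float   # average electrical draw of one blade, in watts

    def total_cores(self) -> int:
        return self.blades * self.cores_per_blade

    def it_load_kw(self) -> float:
        return self.blades * self.watts_per_blade / 1000

    def heat_btu_per_hour(self) -> float:
        # Practically all electrical power consumed by servers ends up as heat,
        # which the cooling system must remove.
        return self.blades * self.watts_per_blade * WATTS_TO_BTU_PER_HOUR


# Hypothetical rack: 48 blades, 64 cores and 500 W each.
rack = RackProfile(blades=48, cores_per_blade=64, watts_per_blade=500)
print(rack.total_cores(), "cores,", rack.it_load_kw(), "kW,", round(rack.heat_btu_per_hour()), "BTU/h")
```

With these assumed numbers a single rack draws about 24 kW, several times what a typical office server closet handles, which is why liquid cooling and careful airflow design become necessary.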

Geographical Distribution of the Cloud

The geographical distribution of data centers is a key factor in service quality. Over time, alongside massive data centers, edge data centers and modular data centers have been introduced.

A modular data center can be expanded over time by adding new units to increase computing power. This strategy is widely used by cloud providers offering public cloud services in newly developing areas, ensuring low-latency service for a limited set of cloud resources.

However, as you might expect, the computing power of a modular container-based data center (as shown in Figure 26) cannot match that of a large-scale data center (as shown in Figure 24).

The geographical distribution of cloud providers’ data centers follows a two-tiered structure:

- Consumers see only the service delivery regions (referred to as regions).

- Each region consists of multiple redundant data centers providing high availability at the regional level.
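The benefit of having several redundant data centers per region can be quantified with a simple probability model: the region is unavailable only if all of its data centers are down at the same time. The sketch assumes, as a strong simplification, that data center outages are independent and that each one is unavailable 0.1% of the time; both the figure and the `region_availability` helper are hypothetical.

```python
def region_availability(dc_unavailability: float, data_centers: int) -> float:
    """Probability that at least one data center of a region is up, assuming
    data center outages are independent (a strong simplification: real outages
    can be correlated, e.g. by a regional power or network failure)."""
    if not 0.0 <= dc_unavailability <= 1.0:
        raise ValueError("unavailability must be a probability between 0 and 1")
    if data_centers < 1:
        raise ValueError("a region needs at least one data center")
    # The region is down only if every data center is down at the same time.
    return 1.0 - dc_unavailability ** data_centers


# Hypothetical figure: each data center is unavailable 0.1% of the time.
for n in (1, 2, 3):
    print(n, "data center(s):", region_availability(0.001, n))
```

Under these assumptions, going from one to three data centers shrinks the chance of a full regional outage from one in a thousand to about one in a billion; correlated failures make the real gain smaller.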

Cloud providers do not disclose the exact physical location of data centers, mainly for security reasons.

However, users can explore the cloud providers’ regional maps, such as:

- Microsoft Azure: Azure Global Infrastructure

- Amazon Web Services (AWS): AWS Global Infrastructure

- Google Cloud: Google Cloud Locations

Regions, once created, gradually expand with additional cloud resources over time.

The time required to establish a new region depends on the regulatory frameworks of the host country where the data centers for that region are located.

Due to legislative constraints, data centers must first comply with national regulations before adhering to international standards.

As a result, each cloud region is effectively tied to data centers within a single country.

The creation of a new region does not immediately guarantee the availability of all cloud resources present in a long-established region.

The cloud resource availability map for each region enables the analysis of two critical factors:

- Cost control – Identifying available resources within a specific region helps optimize expenses, reducing unnecessary data transfers and avoiding unexpected costs.

- Legal risk assessment – If a required cloud resource is unavailable in the designated national region, or is available only outside the compliance perimeter dictated by regulations, relying on it may introduce regulatory and compliance risks.
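Both checks boil down to comparing the services a project needs with what each permitted region actually offers. Here is a minimal sketch of that comparison; the region names, service names, availability map, and `check_placement` helper are all hypothetical and stand in for the per-region availability maps that providers publish.

```python
# Hypothetical availability map (region -> services offered there).
AVAILABILITY: dict[str, set[str]] = {
    "region-italy": {"vm", "object-storage"},
    "region-germany": {"vm", "object-storage", "gpu-vm"},
    "region-us-east": {"vm", "object-storage", "gpu-vm", "managed-ai"},
}


def check_placement(required: set[str], allowed_regions: set[str],
                    availability: dict[str, set[str]]) -> tuple[dict[str, list[str]], list[str]]:
    """For each required service, list the regions inside the compliance perimeter
    that offer it; services with no such region are returned as gaps."""
    report = {
        service: sorted(r for r in allowed_regions if service in availability.get(r, set()))
        for service in sorted(required)
    }
    gaps = [service for service, regions in report.items() if not regions]
    return report, gaps


# Hypothetical constraint: data must stay within EU regions.
report, gaps = check_placement({"vm", "gpu-vm", "managed-ai"},
                               {"region-italy", "region-germany"}, AVAILABILITY)
print(report)
print("compliance gaps:", gaps)
```

Any service that ends up in `gaps` is exactly the situation described above: it exists somewhere in the provider's cloud, but not inside the perimeter the regulations allow.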

Moreover, data traffic between different regions, even when hosted within the same public or private cloud, can lead to higher operational costs, making strategic regional resource planning essential for both financial efficiency and regulatory compliance.
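A rough estimate of that cost only needs the traffic volume that crosses region boundaries and a per-GB price. The sketch below uses a hypothetical traffic pattern and a hypothetical price of 0.02 per GB; real pricing differs by provider and region pair, and treating in-region traffic as free is a simplification (some providers also charge for traffic between zones of the same region).

```python
from typing import NamedTuple


class Flow(NamedTuple):
    source_region: str
    dest_region: str
    gb_per_month: float


def inter_region_cost(flows: list[Flow], price_per_gb: float) -> float:
    """Monthly cost of the traffic that crosses a region boundary.
    Simplification: traffic that stays inside one region is treated as free."""
    billable_gb = sum(f.gb_per_month for f in flows if f.source_region != f.dest_region)
    return round(billable_gb * price_per_gb, 2)


# Hypothetical traffic pattern and hypothetical price (0.02 per GB).
flows = [
    Flow("region-italy", "region-italy", 10_000),   # stays in-region
    Flow("region-italy", "region-us-east", 4_000),  # cross-region replication
    Flow("region-us-east", "region-italy", 1_000),  # results sent back
]
print(inter_region_cost(flows, price_per_gb=0.02))
```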

What Is the Cloud Made Of?

What materials are used in cloud computing?

From a materials science perspective, a server farm consists of various materials used in electronic components, network infrastructure, and cooling systems.

Key Materials Used in Cloud Infrastructure:

1. Metals and Minerals:

- Silicon – Used for semiconductors and processor chips.

- Copper – Used in wiring and circuit boards due to its high electrical conductivity.

- Aluminum – Used for server chassis and heat sinks.

- Gold – Used in connector plating to prevent corrosion.

- Nickel & Cobalt – Used in batteries and electronic components.

- Rare Earth Elements – Used in hard disk magnets and high-performance electronics.

2. Cooling Systems:

- Water – Used in liquid cooling systems for data centers.

- Plastic Pipes – Used for cooling distribution systems.

- Refrigerants – Special chemical compounds used in high-efficiency air conditioning.

3. Power and Storage Technologies:

- Lead & Sulfuric Acid – Used in the lead-acid backup batteries of UPS (Uninterruptible Power Supply) systems.

- Lithium – Used in modern lithium-ion batteries for energy storage.

- Ferromagnetic Materials – Used in transformers and voltage regulators.

4. Structural and Environmental Materials:

- Concrete & Steel – Used to construct data center buildings.

- Thermal Insulation Materials – Used to maintain temperature stability.

- Lightweight Alloys – Used for server racks.

5. Sustainable Energy Materials:

- Solar Panels – Made from silicon and other semiconductors to provide renewable energy.

- Eco-friendly Materials – Used in new green data centers to minimize environmental impact.

These materials are essential for constructing and operating cloud data centers, which house thousands of servers running in a stable and energy-efficient environment.

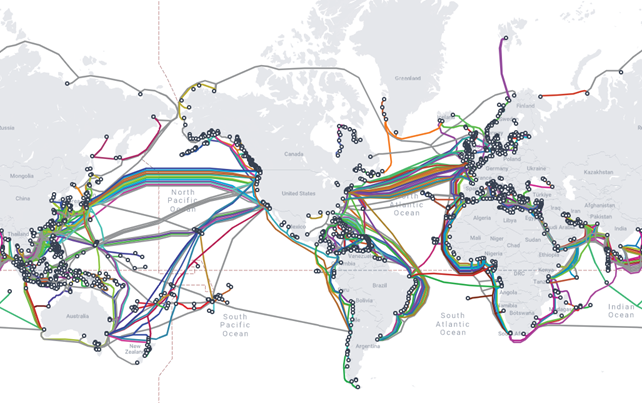

The Critical Role of Communication Infrastructure in the Cloud

One of the key challenges of cloud computing is its underlying communication infrastructure.

In today’s world, the widespread availability of broadband connections has enabled millions of people to continue working remotely during the COVID-19 pandemic. It is clear that without high-speed, large-scale connectivity, this transition would not have been possible.

I live in Italy, in a town where broadband has been deployed, but it has not yet reached every street—a “no man’s land” where no one intervenes. As a result, my neighbor, just 50 meters away, has full broadband access, while my family does not. (That said, with 60 Mbps download speed, we don’t face too many issues! 😊)

The Cloud’s Dependency on Communication Infrastructure

Public cloud services rely heavily on data transport capabilities—both in terms of infrastructure capacity and global and local network integration.

At the lower layers of the ISO/OSI stack, we find the telecommunications carriers that facilitate global data exchanges.

Let’s take, for example, data transmission across the Atlantic Ocean, which connects Europe and the United States.

This massive undersea communication backbone is built on fiber-optic submarine cables, utilizing Dense Wavelength Division Multiplexing (DWDM) technology. DWDM allows multiple data channels to travel through the same fiber, using different wavelengths, significantly boosting bandwidth efficiency.
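The effect of DWDM on capacity is multiplicative: fiber pairs × wavelengths per fiber × data rate per wavelength. The sketch below computes this for a hypothetical cable (6 fiber pairs, 96 wavelengths per fiber, 100 Gbps per wavelength); the numbers and the `dwdm_capacity_tbps` helper are illustrative and do not describe any specific real cable.

```python
def dwdm_capacity_tbps(fiber_pairs: int, wavelengths_per_fiber: int,
                       gbps_per_wavelength: float) -> float:
    """Aggregate one-direction capacity of a DWDM cable: every fiber pair carries
    many wavelengths, and each wavelength is an independent data channel."""
    total_gbps = fiber_pairs * wavelengths_per_fiber * gbps_per_wavelength
    return total_gbps / 1000  # Gbps -> Tbps


# Hypothetical cable: 6 fiber pairs, 96 wavelengths per fiber, 100 Gbps each.
print(dwdm_capacity_tbps(6, 96, 100))
# The same fibers without DWDM (one channel per fiber) for comparison:
print(dwdm_capacity_tbps(6, 1, 100))
```

In this example, multiplexing turns 0.6 Tbps into 57.6 Tbps over the same physical fibers, which is why DWDM is the backbone technology of submarine links.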

Cloud Providers and Network Connectivity

To ensure seamless and reliable connectivity, cloud service providers leverage a mix of:

- Global network providers

- Data transport service providers

- Internet connectivity providers

Many cloud vendors implement hybrid network solutions, combining their own private infrastructure with the existing telecommunications networks of local providers.

A prime example is the MAREA cable, a joint project between Microsoft, Facebook, and Telxius. MAREA is one of the most powerful transatlantic cables, boasting a data transport capacity of 160 terabits per second.
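To put 160 terabits per second into perspective, the sketch below computes the ideal time to move one (decimal) petabyte across such a link, both with the whole link and with a hypothetical 1% share of it. It ignores protocol overhead, latency, and the fact that a cable's capacity is shared by many customers, so the figures are best-case bounds; `transfer_seconds` is a hypothetical helper.

```python
def transfer_seconds(data_bytes: float, link_tbps: float, utilization: float = 1.0) -> float:
    """Ideal time to move `data_bytes` over a link of `link_tbps` terabits per second,
    when only a fraction `utilization` of the link is available to this transfer."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    bits = data_bytes * 8
    return bits / (link_tbps * 1e12 * utilization)


PETABYTE = 10**15  # decimal petabyte, in bytes
# Whole 160 Tbps link versus a hypothetical 1% share of it.
print(transfer_seconds(PETABYTE, 160))
print(transfer_seconds(PETABYTE, 160, utilization=0.01))
```

At full capacity the petabyte crosses the Atlantic in roughly 50 seconds; with a 1% share it takes about 5,000 seconds, still under an hour and a half.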

The Strategic Importance of Interconnection Infrastructures

These interconnection infrastructures are not just essential for commercial cloud services—they are strategic assets for national security as well.

Most of these critical network infrastructures are designed and managed by private companies. However, governments retain some level of control over their operation, particularly when it comes to critical security configurations.

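For a deeper dive into the role of submarine cables in global internet connectivity, you can check out GeoPop’s Italian-language YouTube video: CAVI SOTTOMARINI – la fibra ottica del mondo passa in fondo agli oceani, altro che satelliti – Ep-1 (youtube.com), whose title translates roughly as “Submarine cables: the world’s optical fiber runs along the ocean floor, not through satellites”.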

Global network of submarine internet cables (from https://www.submarinecablemap.com/)

Conclusion

The cloud is not magic, but the outcome of decades of technological progress and cultural transformation. By uncovering its inner workings—from industrial enablers to global networks—we gain the tools to navigate digital transformation with awareness. The better we understand its foundations, the better we can design, govern, and innovate our future cloud-native ecosystems.

References

This article is an excerpt from the book

Cloud-Native Ecosystems

A Living Link — Technology, Organization, and Innovation